Face-Mask Detection With CNN and Transfer Learning (Which Is the Better Approach?)

Introduction:

A CNN (Convolutional Neural Network) is a type of deep neural network. It is particularly effective at finding patterns in images and at performing tasks such as image classification, object detection and segmentation. Structurally, a CNN is composed of convolutional layers, pooling layers and fully-connected layers. The convolutional and pooling layers filter and downsample the input to learn a spatial hierarchy of features from raw data, and the fully-connected layers use those features to complete tasks such as classification. Moreover, when working with CNNs, users also have the option of employing pre-trained models developed by other experts, either to speed up training or to improve model accuracy. Pre-trained models such as GoogLeNet and AlexNet have proven to be good starting points for related deep-learning applications.

For the final semester project in my AI class, I want to use CNNs and Transfer Learning to explore the concept of deep learning. Specifically, I want to develop an AI model that detects masks in a dataset of about 12,000 images. The goal is not only to classify whole images into 2 groups (images of people who wear masks and images of people who do not), but also to determine whether a self-developed CNN can outperform the pre-trained models that are readily available for people to use. Everything is built and modified with Keras, the high-level neural network API written in Python.

Dataset:

The data comes from Kaggle, a public website. The dataset has about 12,000 images. The images of people wearing face masks are scraped from Google Search, while the images of people not wearing one are compiled and reprocessed from the CelebFace dataset created by Jessica Li. Sources:

- https://www.kaggle.com/datasets/ashishjangra27/face-mask-12k-images-dataset — complete dataset.

- https://www.kaggle.com/jessicali9530 — Jessica Li’s dataset

The images are divided into 3 folders: Train data, Test data and Validation data. In those images, people are depicted with various facial expressions and emotions. They include both men and women from multiple cultural backgrounds. A small number of the images are cartoons rather than photos of actual people.

- Train data: 5,000 images with masks | 5,000 images without masks

- Test data: 483 images with masks | 509 images without masks

- Validation data: 400 images with masks | 400 images without masks
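A minimal sketch of loading the three folders with `keras.utils.image_dataset_from_directory`, assuming the Kaggle download is unzipped so that each split folder (`Train`, `Validation`, `Test`) contains one subfolder per class named `WithMask` and `WithoutMask`; these folder names, the batch size and the helper name `load_split` are assumptions. Passing `class_names` explicitly fixes the label order so that class 0 is "without mask" and class 1 is "with mask", matching the class numbering used later in this post.

```python
import os

from tensorflow import keras

IMAGE_SIZE = (200, 200)  # matches the (200, 200, 3) input shape used by every model
# Assumed subfolder names; listing them explicitly fixes the label order:
# index 0 = without mask, index 1 = with mask
CLASS_NAMES = ["WithoutMask", "WithMask"]


def load_split(root, split, batch_size=32, shuffle=True):
    """Load one split folder (e.g. "Train") as a batched tf.data.Dataset."""
    return keras.utils.image_dataset_from_directory(
        os.path.join(root, split),
        labels="inferred",          # label = name of the image's subfolder
        label_mode="categorical",   # one-hot labels for a 2-unit softmax output
        class_names=CLASS_NAMES,
        image_size=IMAGE_SIZE,      # every image is resized to 200 x 200
        batch_size=batch_size,
        shuffle=shuffle,
    )


# Hypothetical path to the unzipped Kaggle download:
# train_ds = load_split("Face Mask Dataset", "Train")
# val_ds = load_split("Face Mask Dataset", "Validation")
# test_ds = load_split("Face Mask Dataset", "Test", shuffle=False)
```

Keeping `shuffle=False` for the test split keeps predictions aligned with the file order, which makes it easier to build a confusion matrix afterwards.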

Two CNNs are developed to compare and contrast with the pre-trained models. The first CNN is a very basic one with no supporting hyperparameters. The second CNN, on the other hand, includes supporting parameters such as striding, batch normalization and pooling. In terms of pre-trained models, InceptionV3, MobileNetV2 and VGG16 are employed, trained and evaluated.

Objectives and Methods:

>> The goal is to produce intelligent models that detect whether face masks are being worn in pictures.

>> How could authorities take advantage of similar studies to generate statistical data that will help control contagious diseases?

>> Which models are best suited for this data?

>> How do our models compare to Transfer Learning models?

>> What improvements could be made to obtain better results and accuracy?

Processes and Insights:

I. CNN without Supporting Hyperparameters

First, I import the packages the model needs to run: keras, Sequential and plot. After that, I define the model with the number of classes equal to 2, since there are two groups of images to be classified. For the convolution layers, sigmoid is used as the activation function, and the input shape is kept consistent at (200, 200, 3). At deeper layers, I attempt to improve the model by gradually decreasing the filter size and increasing the number of filters. At the very end, I add a Flatten layer and a softmax output layer, which allow the model to perform the classification task.
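A minimal sketch of a network like this first CNN, assuming three convolution layers; the filter counts (16, 32, 64) and kernel sizes (7, 5, 3) are illustrative placeholders, since the exact values are not listed here. It uses sigmoid activations with no pooling, batch normalization or dropout, and ends with Flatten plus a 2-unit softmax layer.

```python
from tensorflow import keras

NUM_CLASSES = 2              # with mask / without mask
INPUT_SHAPE = (200, 200, 3)  # height, width, RGB channels


def build_basic_cnn():
    """Plain stack of sigmoid conv layers: no pooling, batch norm or dropout."""
    model = keras.Sequential([
        keras.Input(shape=INPUT_SHAPE),
        # Kernel size shrinks while the number of filters grows at deeper layers
        keras.layers.Conv2D(16, kernel_size=7, activation="sigmoid"),
        keras.layers.Conv2D(32, kernel_size=5, activation="sigmoid"),
        keras.layers.Conv2D(64, kernel_size=3, activation="sigmoid"),
        keras.layers.Flatten(),
        # Softmax turns the two outputs into class probabilities
        keras.layers.Dense(NUM_CLASSES, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Because nothing shrinks the spatial dimensions, the Flatten layer in this sketch hands 188 × 188 × 64 = 2,262,016 values to the output layer, which `model.summary()` makes visible.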

The results come back after 2 hours of training. Loss averages almost 0.70 while accuracy is at 48%. Validation loss also averages almost 0.70 and validation accuracy is at 50%. A loss this close to ln 2 ≈ 0.693, the cross-entropy of a model that assigns a 50% probability to each class, suggests that the network's outputs stay close to a coin flip. I also build a confusion matrix (CFM) for this model, and class 0 and class 1 have very different values. For class 0 (images of people without masks), the recall is 100%, while for class 1 (images of people with masks) it is 0. The F1 score shows the same split. Based on these numbers, this is not a good model and I highly recommend staying away from it.
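To make the CFM numbers concrete, here is a small pure-Python sketch that computes per-class precision, recall and F1 from a confusion matrix. For illustration only, it assumes a degenerate model that predicts class 0 ("no mask") for every image in the 800-image validation split; this is a hypothetical input consistent with the recall values above, not my model's actual predictions.

```python
def per_class_metrics(y_true, y_pred, num_classes=2):
    """Return the confusion matrix and {class: (precision, recall, f1)}."""
    # cm[t][p] counts images whose true class is t and predicted class is p
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    metrics = {}
    for c in range(num_classes):
        tp = cm[c][c]
        predicted = sum(cm[t][c] for t in range(num_classes))  # column sum
        actual = sum(cm[c])                                     # row sum
        # A class that is never predicted gets precision 0 by convention
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[c] = (precision, recall, f1)
    return cm, metrics


# Validation split: 400 images without masks (class 0), 400 with masks (class 1)
y_true = [0] * 400 + [1] * 400
# Hypothetical degenerate model that answers "no mask" for every image
y_pred = [0] * 800

cm, metrics = per_class_metrics(y_true, y_pred)
accuracy = sum(cm[c][c] for c in range(2)) / len(y_true)
print(cm)        # [[400, 0], [400, 0]]
print(accuracy)  # 0.5
for c, (p, r, f) in metrics.items():
    print(c, round(p, 3), round(r, 3), round(f, 3))
# 0 0.5 1.0 0.667
# 1 0.0 0.0 0.0
```

Accuracy lands at exactly 50% because the validation split is balanced, and class 1 gets a precision of 0 because it is never predicted; scikit-learn's `classification_report` exposes the same choice through its `zero_division` argument.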

II. CNN with Supporting Parameters

For the second CNN model, the convolution layers look drastically different from the previous one, because they involve additional layers and hyperparameters for fine-tuning the network and making it more efficient. The layers added here are Conv2D, MaxPooling2D, BatchNormalization, Dropout and Dense. I use ReLU as the activation function this time but still keep the input shape at (200, 200, 3). I again gradually decrease the filter size and increase the number of filters at deeper layers, and Flatten and softmax are again introduced at the end. The complete set of layers is as follows:

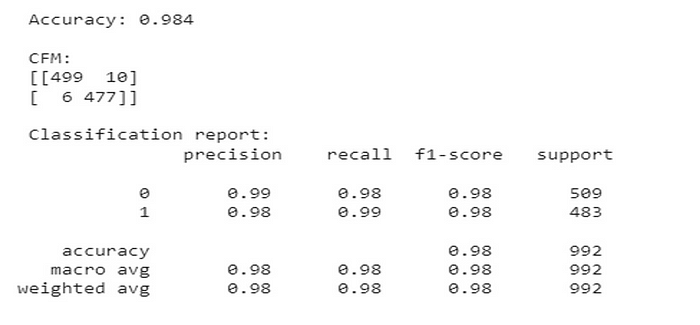
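The exact layers are only shown in the image above, so the following is a hypothetical sketch of a comparable network, not a transcription of it. It combines the ingredients named in the text: ReLU Conv2D layers (the first one strided), BatchNormalization, MaxPooling2D, Dropout and Dense, with Flatten and a softmax layer at the end. All layer sizes and the dropout rate are assumptions.

```python
from tensorflow import keras


def build_improved_cnn(num_classes=2, input_shape=(200, 200, 3)):
    """Conv2D + BatchNormalization + MaxPooling2D blocks, then Dense + Dropout."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        # Strided first convolution halves the resolution: 200 -> 100
        keras.layers.Conv2D(32, kernel_size=5, strides=2, padding="same",
                            activation="relu"),
        keras.layers.BatchNormalization(),
        keras.layers.MaxPooling2D(pool_size=2),   # 100 -> 50
        keras.layers.Conv2D(64, kernel_size=3, padding="same", activation="relu"),
        keras.layers.BatchNormalization(),
        keras.layers.MaxPooling2D(pool_size=2),   # 50 -> 25
        keras.layers.Conv2D(128, kernel_size=3, padding="same", activation="relu"),
        keras.layers.BatchNormalization(),
        keras.layers.MaxPooling2D(pool_size=2),   # 25 -> 12
        keras.layers.Flatten(),                   # 12 * 12 * 128 = 18,432 values
        keras.layers.Dense(128, activation="relu"),
        keras.layers.Dropout(0.5),                # zeroes half the units during training
        keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

The strided convolution and the three pooling layers shrink the feature map from 200 × 200 to 12 × 12 before Flatten, so the dense layers only see 18,432 values, which keeps the parameter count and training time manageable.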

As expected, the results from this model are far better than the previous one. With the supporting hyperparameters, training takes only 30 minutes, and over 10 epochs the average accuracy is about 98% while the average loss is only about 0.25. In its CFM, the precision, recall and F1 score for both classes stay at 98–99%. This indicates that the model successfully and effectively classifies images into mask-wearing and non-mask-wearing groups.
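Averages like these are taken over the training epochs. A minimal sketch of computing them from the `History` object that Keras `model.fit` returns, assuming an already compiled model and `tf.data` datasets for training and validation; `train_and_summarize` is a hypothetical helper name.

```python
def train_and_summarize(model, train_ds, val_ds, epochs=10):
    """Fit a compiled Keras model and average every logged metric over the epochs."""
    history = model.fit(train_ds, validation_data=val_ds, epochs=epochs, verbose=2)
    # history.history maps each metric name (e.g. "loss", "accuracy",
    # "val_loss", "val_accuracy") to a list with one value per epoch
    averages = {name: sum(values) / len(values)
                for name, values in history.history.items()}
    return history, averages
```

For example, `history, averages = train_and_summarize(model, train_ds, val_ds)` followed by `averages["accuracy"]` gives the mean training accuracy over 10 epochs; the dictionary keys follow the metrics passed to `model.compile`.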

III. Transfer Learning Models with InceptionV3, MobileNetV2 and VGG16

To set up these pre-trained models, I first remove their fully connected layers and replace them with my own layer, whose output dimension equals the number of classes in the image dataset. The new model is then compiled and fitted, so that learning happens across all layers and the weights of the new fully connected layer are updated. Overfitting is not a big concern, since the higher-level features of these pre-trained networks are not removed. The steps are as follows (they are demonstrated for InceptionV3, but the same code applies to the other two models).
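A minimal sketch of this head replacement with `keras.applications`, covering all three networks. It assumes a `GlobalAveragePooling2D` layer between the pre-trained base and the new softmax layer and an Adam learning rate of 1e-4; both are assumptions, and `build_transfer_model` is a hypothetical helper name. `include_top=False` removes the original ImageNet classifier, and the whole base stays trainable so learning happens across all layers. Images should also go through the matching `preprocess_input` function (for example `keras.applications.inception_v3.preprocess_input`) before training.

```python
from tensorflow import keras

# Pre-trained architectures compared in this post
BASES = {
    "InceptionV3": keras.applications.InceptionV3,
    "MobileNetV2": keras.applications.MobileNetV2,
    "VGG16": keras.applications.VGG16,
}


def build_transfer_model(base_name="InceptionV3", num_classes=2,
                         input_shape=(200, 200, 3), weights="imagenet"):
    """Pre-trained convolutional base plus a new softmax classification layer."""
    # include_top=False drops the original fully connected ImageNet head
    base = BASES[base_name](include_top=False, weights=weights,
                            input_shape=input_shape)
    base.trainable = True  # fine-tune every layer, not only the new head

    inputs = keras.Input(shape=input_shape)
    x = base(inputs)
    # Assumed reduction of the base's feature maps to one vector per image
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4),
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

For example, `build_transfer_model("VGG16")` builds the VGG16 variant. Passing `weights=None` skips the ImageNet download, which is handy for a quick shape check but throws away the pre-training that transfer learning relies on. A small learning rate is a common choice when every pre-trained layer is updated, so the early epochs do not overwrite the learned features.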

All models take about 20 minutes to train, and their accuracy is very high. Specifically, MobileNetV2 reaches up to 99.4% accuracy, whereas InceptionV3 and VGG16 reach 99.80% and 99.50%, respectively. These models clearly show the ability to distinguish images of people wearing masks from images of people who are not. Below are their accuracy and loss graphs. In their CFMs, the scores for both classes converge to 1, suggesting that the transfer learning was successful.
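A minimal sketch of drawing accuracy and loss curves like these with matplotlib from the `History` object that `model.fit` returns, assuming the model was compiled with `metrics=["accuracy"]` and fitted with validation data; `plot_history` is a hypothetical helper name.

```python
import matplotlib.pyplot as plt


def plot_history(history, title):
    """Plot train vs. validation accuracy and loss side by side."""
    metrics = history.history
    epochs = range(1, len(metrics["loss"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))

    ax_acc.plot(epochs, metrics["accuracy"], label="train")
    ax_acc.plot(epochs, metrics["val_accuracy"], label="validation")
    ax_acc.set(title=f"{title}: accuracy", xlabel="epoch", ylabel="accuracy")

    ax_loss.plot(epochs, metrics["loss"], label="train")
    ax_loss.plot(epochs, metrics["val_loss"], label="validation")
    ax_loss.set(title=f"{title}: loss", xlabel="epoch", ylabel="loss")

    for ax in (ax_acc, ax_loss):
        ax.legend()
    fig.tight_layout()
    return fig
```

Calling `plot_history(history, "MobileNetV2").savefig("mobilenetv2.png")` once per model gives one figure per network, which makes the train and validation curves easy to compare.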

Conclusion and Final Thoughts:

Building and designing my own CNN from scratch requires more effort and computational power than reusing a pre-trained network. For this image dataset, Transfer Learning is the preferable approach, since all the pre-trained networks I use end up with better accuracy than my own CNN models, with or without supporting hyperparameters. Moreover, Transfer Learning takes less time to train because it is less demanding on the CPU than a self-developed CNN. Overall, MobileNetV2 is the best model with an accuracy of 99.89%, whereas the CNN that lacks essential hyperparameters is the worst, with an accuracy of only 48.69%. Based on these results, Transfer Learning appears to be a better and more powerful technique for pattern detection and image classification. However, I do not want to rely on a single project to discourage others from designing and training their own CNNs, because a number of empirical studies have obtained higher accuracy with a successfully constructed custom CNN.

Thanks for reading, and please let me know your thoughts on whether Transfer Learning is better than designing your own CNN!